How do I copy data from CPU to GPU in a C++ process and run TF in another python process while pointing to the copied memory? - Stack Overflow

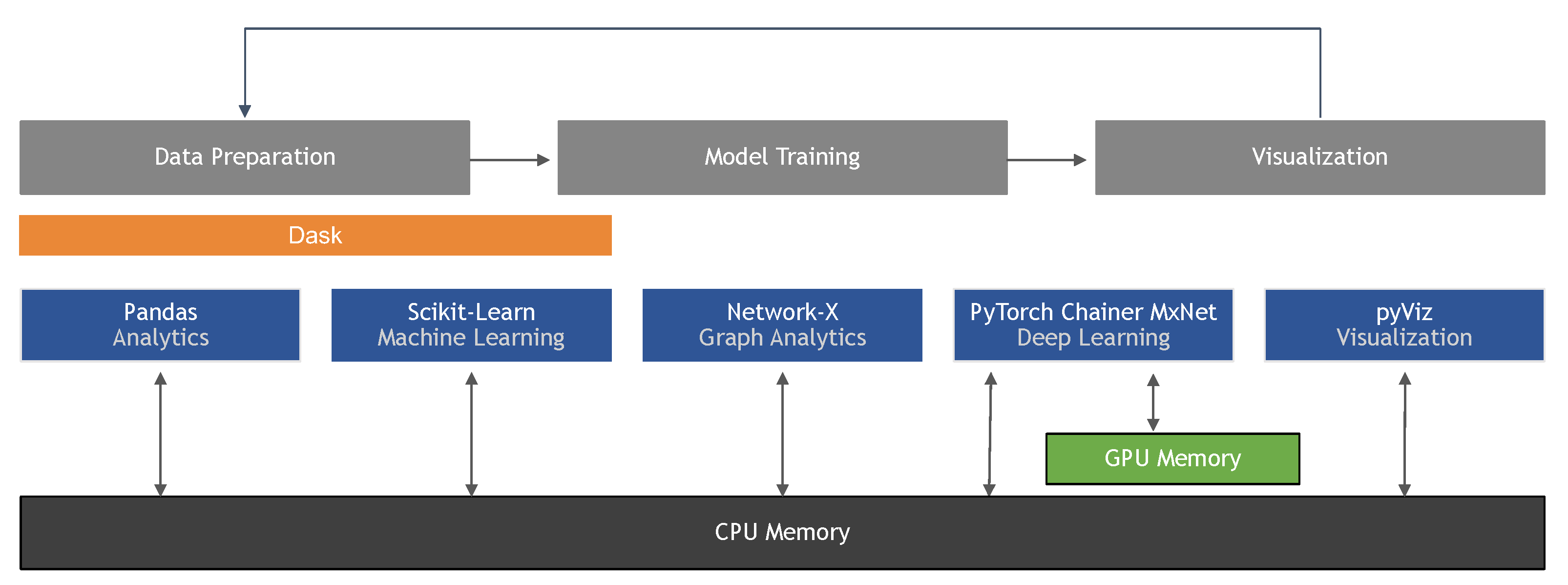

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

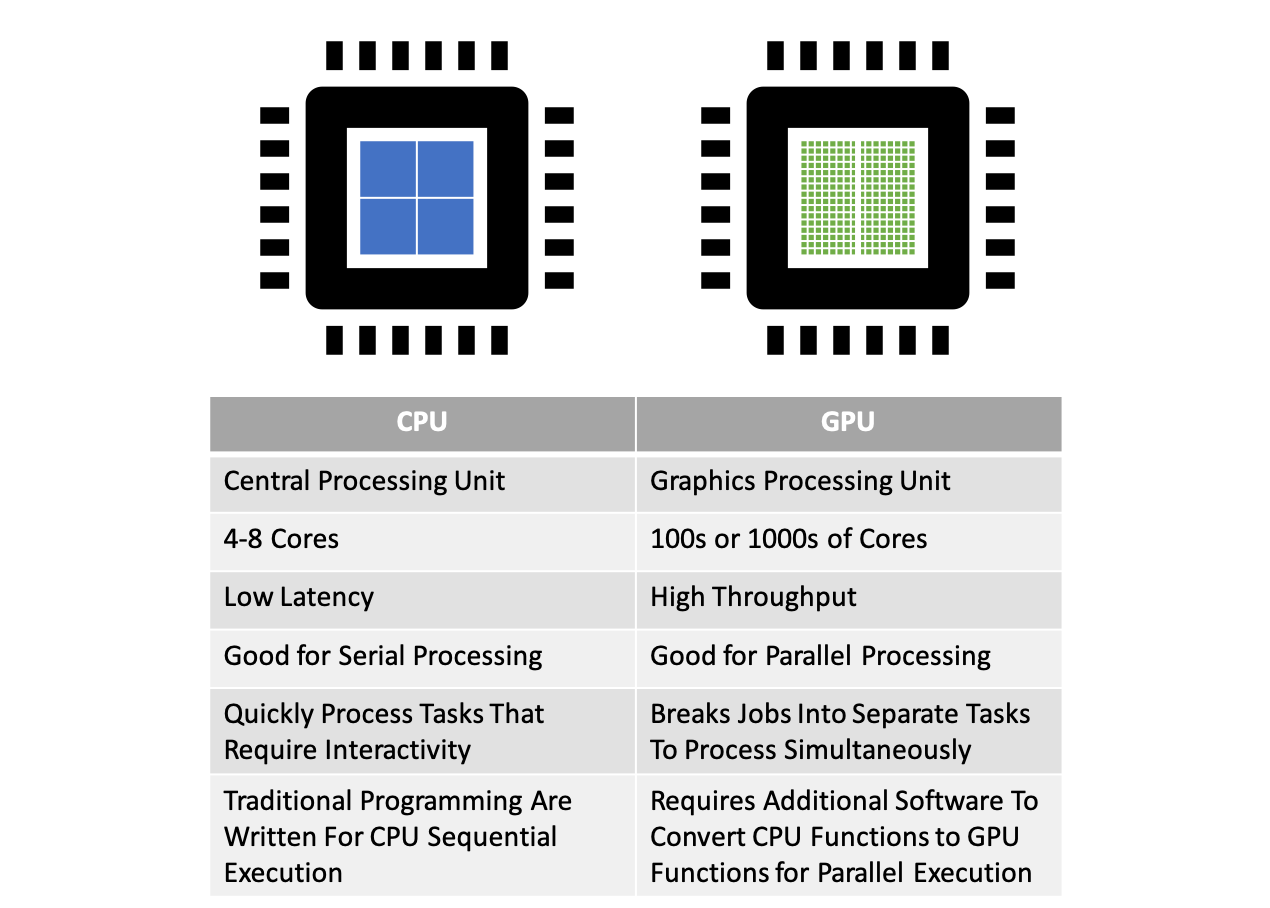

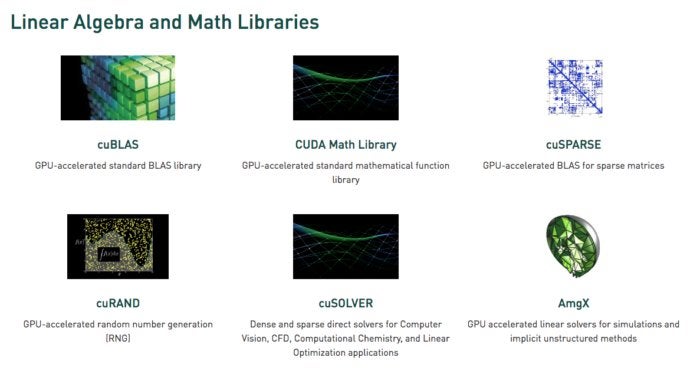

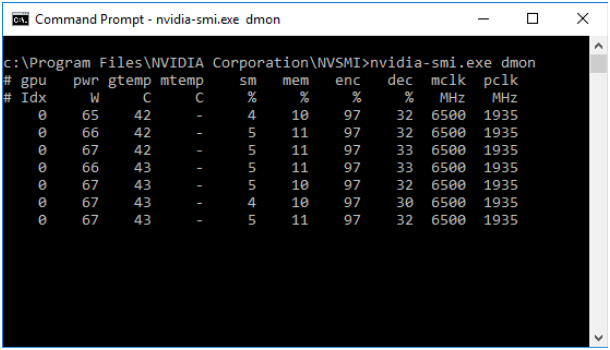

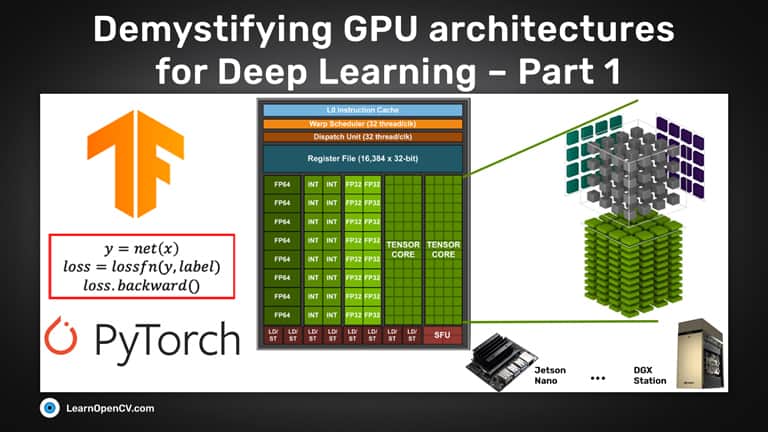

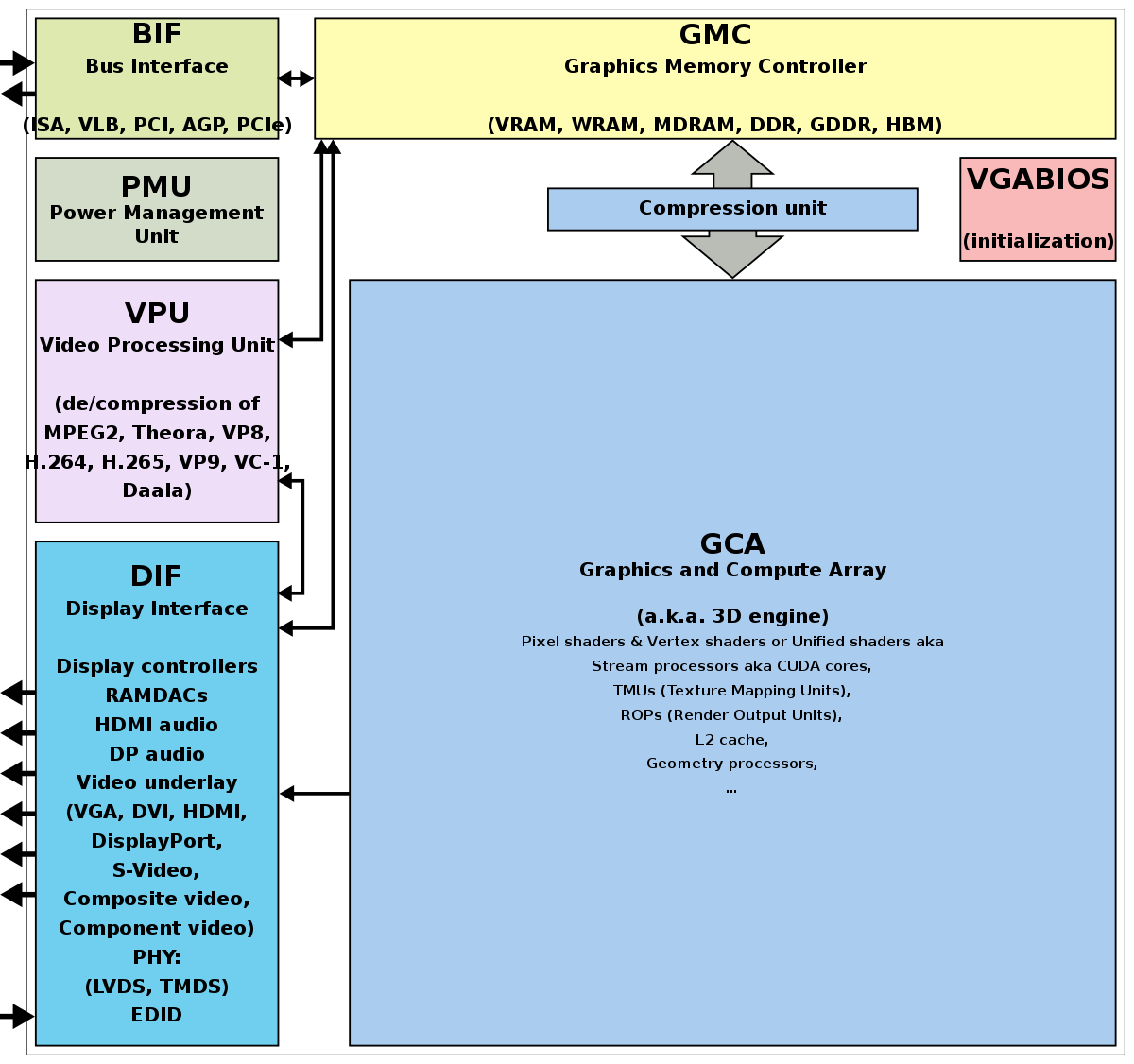

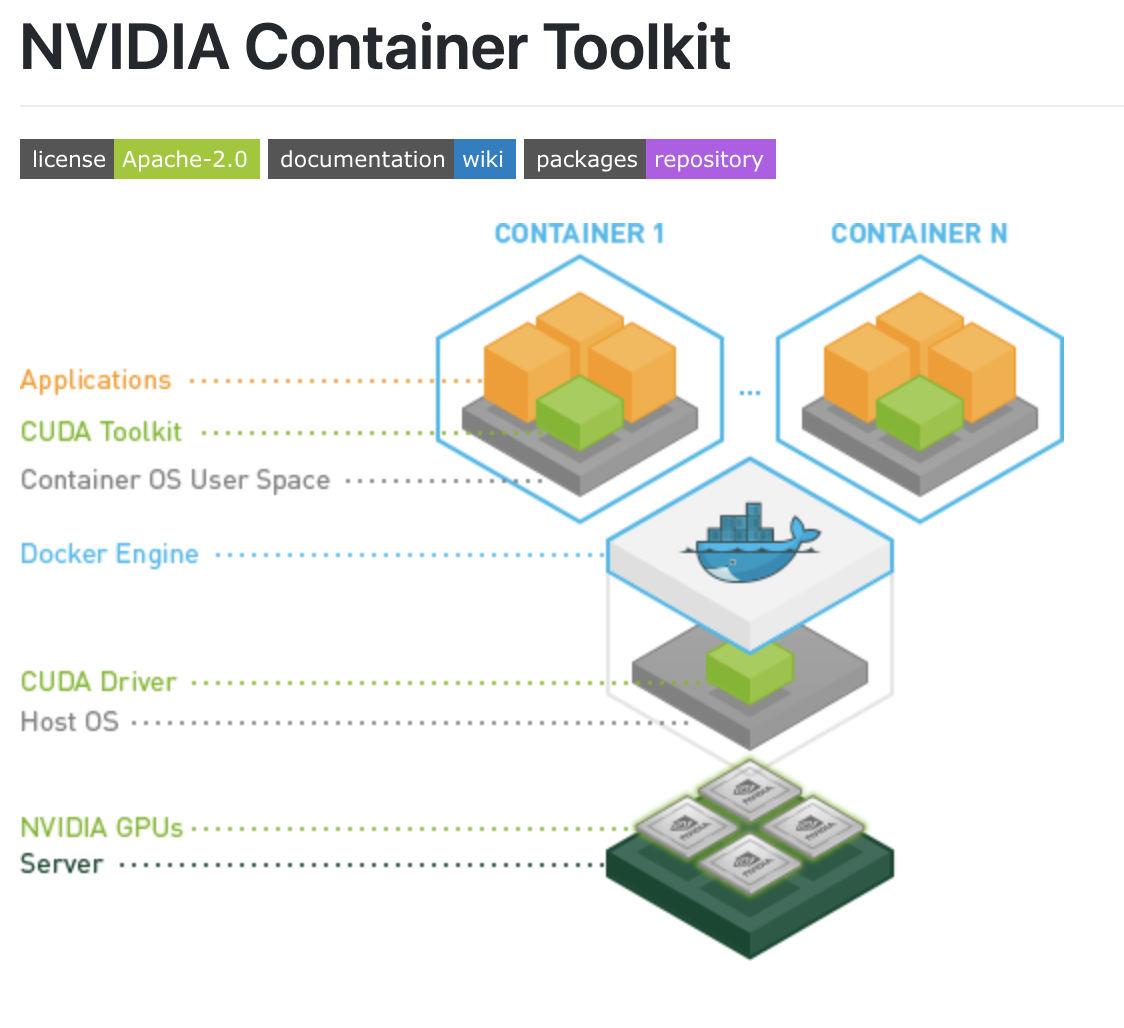

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

Productive and Efficient Data Science with Python: With Modularizing, Memory Profiles, and Parallel/GPU Processing, by Tirthajyoti Sarkar - Amazon.in

Accelerating Sequential Python User-Defined Functions with RAPIDS on GPUs for 100X Speedups | NVIDIA Technical Blog