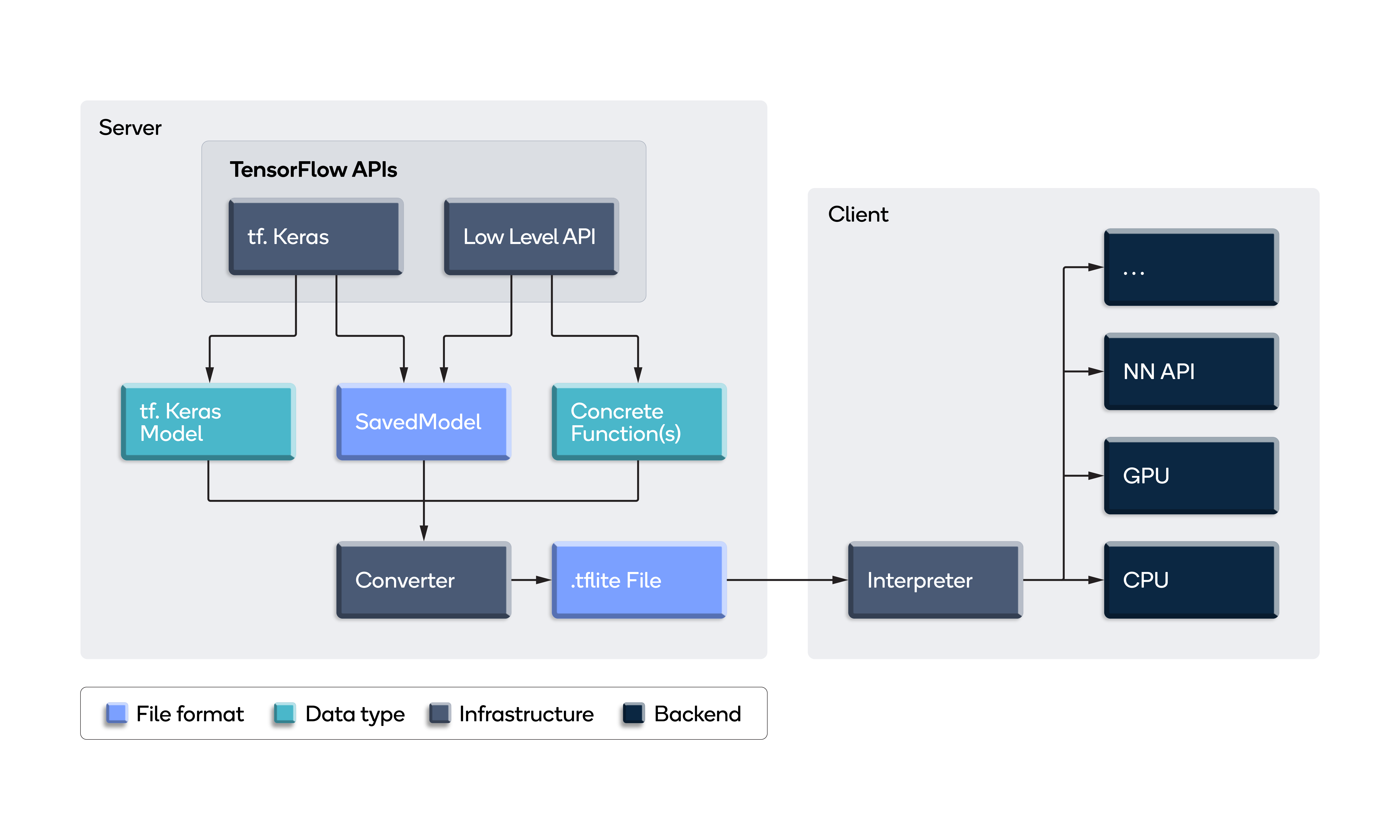

TensorFlow on Twitter: "Deploy a custom ML model to mobile 📲 In this #GoogleIO session you'll learn how to: 🟠 Integrate ML in your mobile apps 🟠 Build custom TensorFlow Lite models

Loading and running custom TensorFlow Lite models with AI Benchmark...

How to use Tensorflow Lite GPU support for python code · Issue #40706 · tensorflow/tensorflow · GitHub

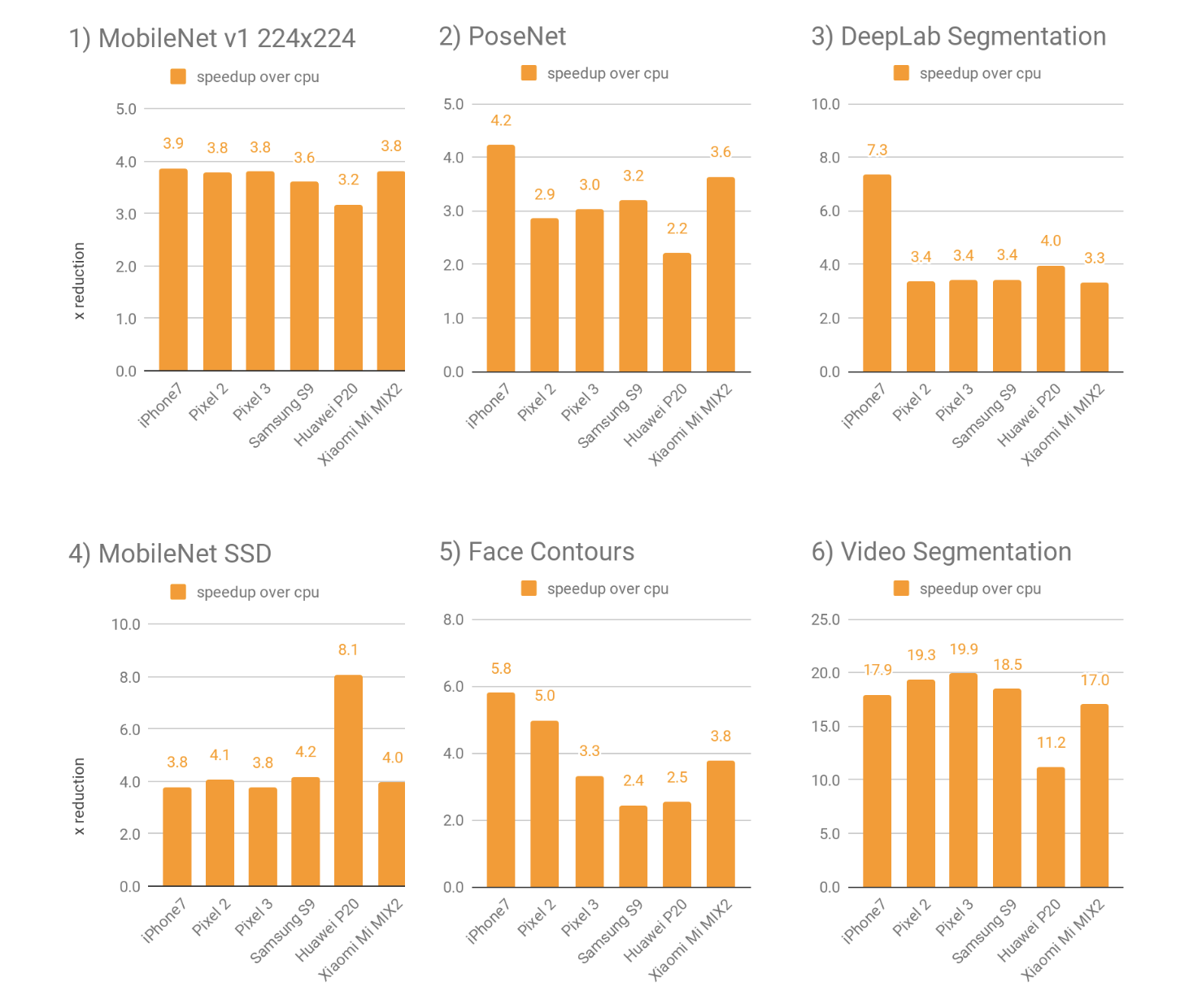

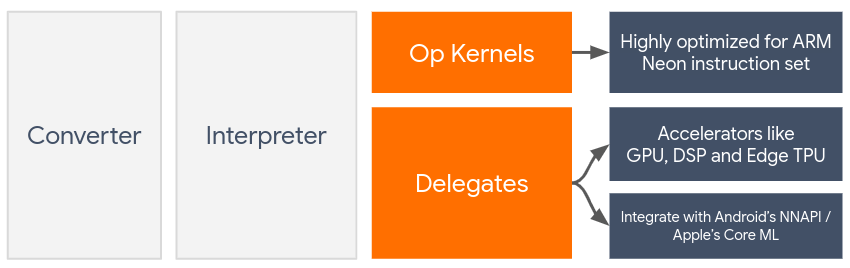

TensorFlow team releases a developer preview of TensorFlow Lite with new mobile GPU backend support | Packt Hub

TensorFlow Lite Core ML delegate enables faster inference on iPhones and iPads — The TensorFlow Blog

GitHub - terryky/tflite_gles_app: GPU accelerated deep learning inference applications for RaspberryPi / JetsonNano / Linux PC using TensorflowLite GPUDelegate / TensorRT

Applied Sciences | A Deep Learning Framework Performance Evaluation to Use YOLO in Nvidia Jetson Platform